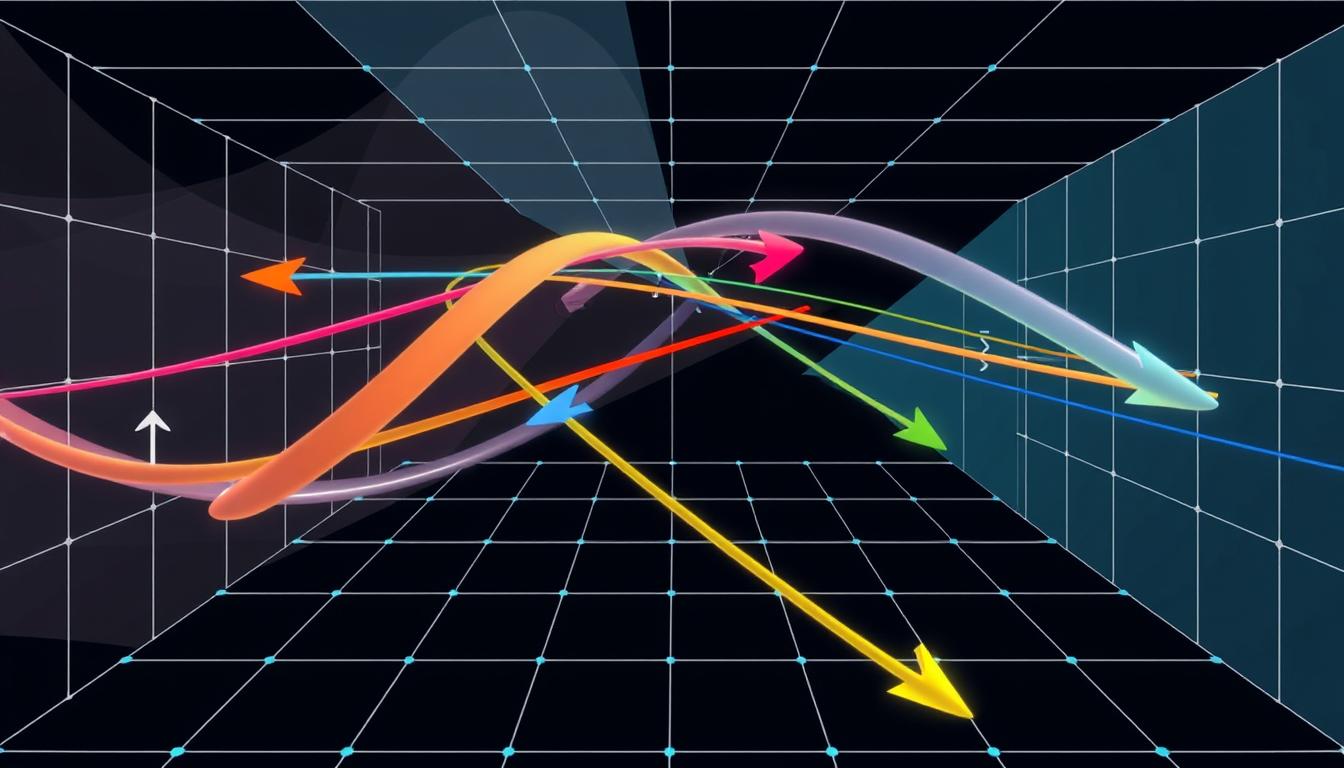

Vector spaces, a fundamental concept in linear algebra, have a profound impact on our understanding of geometry and the development of various algorithms. This article will delve into the essential characteristics of vector spaces, their axiomatic approach, and their crucial role in the field of linear algebra.

Vector spaces are mathematical structures that comprise a set of vectors, along with two operations: vector addition and scalar multiplication. These structures possess unique properties that make them invaluable in the study of linear algebra, geometry, and the design of efficient algorithms.

Contents

- 1 Introduction to Vector Spaces

- 2 Axiomatic Approach to Vector Spaces

- 3 Vector Space Operations

- 4 Subspaces and Their Properties

- 5 Linear Combinations and Spanning Sets

- 6 Vector Spaces and Linear Transformations

- 7 Vector Spaces in Real-World Applications

- 8 Vector Spaces and Matrices

- 9 FAQ

- 9.1 What is a vector space?

- 9.2 Why are vector spaces important in linear algebra?

- 9.3 What is the axiomatic approach to defining vector spaces?

- 9.4 What are the main operations in vector spaces?

- 9.5 What are subspaces, and how are they defined?

- 9.6 What are linear combinations and spanning sets in vector spaces?

- 9.7 How are vector spaces related to linear transformations?

- 9.8 What are some real-world applications of vector spaces?

- 9.9 How are vector spaces related to matrices?

Key Takeaways

- Vector spaces are mathematical structures that underpin our understanding of geometry and the development of algorithms.

- They are defined by a set of vectors and two operations: vector addition and scalar multiplication.

- Vector spaces exhibit specific characteristics, such as closure, associativity, and the existence of a zero vector and additive inverses.

- The axiomatic approach to vector spaces provides a rigorous framework for their study and application.

- Vector spaces play a crucial role in linear algebra, allowing for the analysis of systems of linear equations and the transformation of geometric objects.

Introduction to Vector Spaces

Vector spaces are fundamental mathematical structures that lie at the heart of linear algebra. These versatile constructs have a wide range of applications, from computer graphics to quantum mechanics. Understanding the definition of vector spaces and their characteristics is crucial for exploring the importance of vector spaces in linear algebra.

Definition and Characteristics

A vector space is a set of objects, called vectors, that can be added and multiplied by scalars (real or complex numbers) while satisfying certain axioms. These axioms ensure that the operations of vector addition and scalar multiplication behave in a consistent and predictable manner. The definition of vector spaces encompasses the essential properties that distinguish these structures from other mathematical systems.

- Closed under vector addition: The sum of any two vectors in the space is also a vector in the same space.

- Closed under scalar multiplication: The product of a vector and a scalar is also a vector in the same space.

- Existence of zero vector: There is a unique vector, called the zero vector, that serves as the identity element for vector addition.

- Existence of additive inverses: For every vector in the space, there exists a unique vector that, when added to the original vector, results in the zero vector.

Importance in Linear Algebra

The importance of vector spaces in linear algebra cannot be overstated. These mathematical structures provide the foundation for many of the fundamental concepts and techniques in the field. Vector spaces are the natural setting for the study of systems of linear equations, linear transformations, and matrix operations. They enable the analysis of complex problems by breaking them down into simpler components, allowing for a deeper understanding and more efficient problem-solving.

| Characteristic | Importance in Linear Algebra |

|---|---|

| Closed under vector addition and scalar multiplication | Allows for the manipulation and analysis of systems of linear equations and matrices. |

| Existence of zero vector and additive inverses | Facilitates the study of linear transformations and their properties, such as kernels and ranges. |

| Concept of linear independence and basis | Underpins the representation of vectors in a vector space and the study of subspaces. |

By understanding the definition, characteristics, and importance of vector spaces in linear algebra, students and researchers can unlock the power of this fundamental mathematical concept and apply it to a wide range of real-world problems and applications.

Axiomatic Approach to Vector Spaces

In the realm of linear algebra, the axiomatic approach to vector spaces is a fundamental concept that underpins our understanding of these mathematical structures. This approach lays out a set of essential axioms, or fundamental principles, that must be satisfied for a set to be considered a vector space. By grasping this axiomatic framework, we can delve deeper into the intricate properties and applications of vector spaces and their role in linear algebra.

The axiomatic definition of a vector space is based on the following key axioms:

- Closure under vector addition: For any two vectors in the set, their sum must also be a member of the set.

- Closure under scalar multiplication: For any vector in the set and any scalar, the product of the scalar and the vector must also be a member of the set.

- Existence of a zero vector: The set must contain a unique zero vector, which, when added to any other vector, results in that vector.

- Existence of additive inverses: For every vector in the set, there must exist an additive inverse, such that their sum is the zero vector.

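- Commutativity and associativity of addition: For all vectors u, v, and w in the set, u + v = v + u and (u + v) + w = u + (v + w).

- Distributive properties: For all scalars a and b and all vectors u and v, a(u + v) = au + av and (a + b)v = av + bv.

- Compatibility and identity of scalar multiplication: For all scalars a and b and every vector v, a(bv) = (ab)v and 1v = v.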
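One way to build intuition for these axioms is to spot-check them on a handful of concrete vectors. The sketch below does this for pairs of integers with componentwise operations; the helper names (`add`, `scale`, `axioms_hold`) and the sample values are illustrative assumptions, and passing the check is evidence for the samples only, not a proof.

```python
from itertools import product

ZERO = (0, 0)  # zero vector of the plane


def add(u, v):
    """Componentwise vector addition for pairs of numbers."""
    return (u[0] + v[0], u[1] + v[1])


def scale(a, v):
    """Multiply every component of v by the scalar a."""
    return (a * v[0], a * v[1])


def axioms_hold(vectors, scalars):
    """Spot-check the vector space axioms on the given samples."""
    for u, v, w in product(vectors, repeat=3):
        if add(u, v) != add(v, u):                    # commutativity
            return False
        if add(add(u, v), w) != add(u, add(v, w)):    # associativity
            return False
    for v in vectors:
        if add(v, ZERO) != v:                         # zero vector
            return False
        if add(v, scale(-1, v)) != ZERO:              # additive inverse
            return False
        if scale(1, v) != v:                          # identity scalar
            return False
    for a, b in product(scalars, repeat=2):
        for u, v in product(vectors, repeat=2):
            # distributivity over vector addition
            if scale(a, add(u, v)) != add(scale(a, u), scale(a, v)):
                return False
            # distributivity over scalar addition
            if scale(a + b, v) != add(scale(a, v), scale(b, v)):
                return False
            # compatibility of scalar multiplication
            if scale(a * b, v) != scale(a, scale(b, v)):
                return False
    return True


sample_vectors = [(1, 2), (-3, 0), (4, -5)]
sample_scalars = [0, 1, -2, 3]
print(axioms_hold(sample_vectors, sample_scalars))  # True
```

Integers are used so that every comparison is exact; with floating-point components the equalities would need a tolerance.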

By adhering to these axiomatic principles, a set of elements can be formally recognized as a vector space, unlocking a world of possibilities in the exploration of linear algebra and its applications. This axiomatic approach serves as the foundation for understanding the intricate relationships and transformations within vector spaces, paving the way for more advanced concepts and problem-solving techniques.

“The axiomatic approach to vector spaces is the cornerstone of linear algebra, allowing us to rigorously define and analyze these fundamental mathematical structures.”

Vector Space Operations

In the world of linear algebra, vector spaces are defined by their fundamental operations – vector addition and scalar multiplication. These operations are the building blocks that allow us to manipulate and analyze vectors, unlocking a wealth of insights and applications.

Vector Addition

Vector addition combines two vectors into a new vector. In coordinates, the sum is computed component by component. For geometric vectors in the plane or in space, the parallelogram law gives the same result: the sum is the diagonal of the parallelogram formed by the two original vectors. Vector addition underlies many calculations, such as finding the resultant of multiple forces acting on an object.
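As a small illustration of the resultant-force example, the sketch below adds two vectors component by component. The function name `add_vectors` and the two force values are hypothetical, chosen only for the example.

```python
def add_vectors(u, v):
    """Add two vectors of equal length component by component."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same number of components")
    return tuple(a + b for a, b in zip(u, v))


force_1 = (3, 0)  # hypothetical force along the x-axis
force_2 = (0, 4)  # hypothetical force along the y-axis
resultant = add_vectors(force_1, force_2)
print(resultant)  # (3, 4)
```

The same function works for vectors with any number of components, as long as both arguments have the same length.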

Scalar Multiplication

Scalar multiplication multiplies a vector by a scalar, a single real or complex number. For real scalars, this stretches or shrinks the vector: a positive scalar preserves its direction, a negative scalar reverses it, and zero produces the zero vector. Scalar multiplication therefore lets us adjust the magnitude of a vector while keeping it on the same line through the origin.
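A minimal sketch of scalar multiplication in coordinates follows; `scale_vector` is an illustrative name. Note that a negative scalar flips the vector to the opposite direction and that the scalar zero collapses any vector to the zero vector.

```python
def scale_vector(a, v):
    """Multiply every component of the vector v by the scalar a."""
    return tuple(a * x for x in v)


v = (2, -1)
print(scale_vector(3, v))   # (6, -3): three times as long, same direction
print(scale_vector(-1, v))  # (-2, 1): same length, opposite direction
print(scale_vector(0, v))   # (0, 0): the zero vector
```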

These two fundamental operations, vector addition and scalar multiplication, form the core of vector space theory and are essential for understanding the behavior and applications of vector spaces in linear algebra.

“Vector spaces are the foundation upon which linear algebra is built, and the operations of vector addition and scalar multiplication are the cornerstones of this foundation.”

Subspaces and Their Properties

In the captivating world of linear algebra, the concept of subspaces plays a pivotal role. Subspaces are special sets within a larger vector space that possess their own unique characteristics and properties. These subspaces not only provide deeper insights into the structure of vector spaces but also pave the way for numerous applications in the fields of linear algebra and beyond.

To understand subspaces, we must first grasp the fundamental definition of a vector space. A vector space is a set of elements, called vectors, that can be added and multiplied by scalars while obeying certain rules. Subspaces, then, are the subsets of a vector space that inherit these same rules and properties, forming their own distinct mathematical structures within the larger space.

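A subset of a vector space is a subspace exactly when it meets the following conditions:

- Contains the zero vector: The zero vector of the larger space must belong to the subset, so the subset is never empty.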

- Closed under addition: If two vectors in the subspace are added, the result must also be an element of the subspace.

- Closed under scalar multiplication: If a vector in the subspace is multiplied by a scalar, the result must also be an element of the subspace.
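The subspace conditions can be spot-checked on sample points. The sketch below compares the line y = 2x, which passes through the origin, with the shifted line y = 2x + 1, which does not; the function names and sample values are assumptions made for illustration, and a passing check is not a proof.

```python
def on_line(v):
    """Membership test for the candidate subspace {(x, y) : y = 2x}."""
    return v[1] == 2 * v[0]


def on_shifted_line(v):
    """Membership test for {(x, y) : y = 2x + 1}, which misses the origin."""
    return v[1] == 2 * v[0] + 1


def passes_subspace_checks(contains, samples, scalars):
    """Spot-check the zero vector and closure under both operations."""
    if not contains((0, 0)):
        return False
    for u in samples:
        for v in samples:
            if not contains((u[0] + v[0], u[1] + v[1])):  # closed under addition
                return False
        for a in scalars:
            if not contains((a * u[0], a * u[1])):        # closed under scaling
                return False
    return True


line_samples = [(1, 2), (-2, -4), (3, 6)]
shifted_samples = [(0, 1), (1, 3), (-1, -1)]
scalars = [0, 2, -3]

print(passes_subspace_checks(on_line, line_samples, scalars))             # True
print(passes_subspace_checks(on_shifted_line, shifted_samples, scalars))  # False
```

The shifted line fails immediately because it does not contain the zero vector, which is often the quickest test to try first.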

These properties ensure that subspaces maintain the same fundamental operations as the original vector space, allowing for a seamless integration and analysis within the larger mathematical framework.

Exploring the properties of subspaces sheds light on the intricate structure of vector spaces, paving the way for deeper understanding and more sophisticated applications in linear algebra. By mastering the concept of subspaces, students and researchers alike can unlock new avenues for groundbreaking discoveries and innovative solutions in various scientific and engineering disciplines.

Linear Combinations and Spanning Sets

In the realm of vector spaces, the concepts of linear combinations and spanning sets play a crucial role in understanding the structure and properties of these mathematical constructs. Let’s delve into these fundamental ideas and explore their significance in linear algebra.

Span and Basis

The span of a set of vectors is the collection of all linear combinations of those vectors. When the span equals the whole space, the set is called a spanning set. This notion is essential for defining a basis of a vector space: a linearly independent set of vectors that spans the entire space.

Every vector in the space can be written as a linear combination of the basis vectors in exactly one way. Equivalently, a basis is a minimal spanning set: removing any vector from it leaves a set that no longer spans the space. A vector space generally has many different bases, but in a finite-dimensional space they all contain the same number of vectors, called the dimension.
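To make the idea of unique coordinates concrete, the sketch below expresses a vector of the plane in terms of two basis vectors by solving a 2x2 system with Cramer's rule. The function names and the particular basis are illustrative assumptions; exact fractions are used to avoid rounding.

```python
from fractions import Fraction


def linear_combination(coeffs, vectors):
    """Return c1*v1 + c2*v2 + ... for vectors in the plane."""
    x = sum(c * v[0] for c, v in zip(coeffs, vectors))
    y = sum(c * v[1] for c, v in zip(coeffs, vectors))
    return (x, y)


def coordinates_in_basis(target, b1, b2):
    """Solve c1*b1 + c2*b2 = target; return None if b1 and b2 are dependent."""
    det = b1[0] * b2[1] - b2[0] * b1[1]
    if det == 0:
        return None  # b1 and b2 lie on one line, so they are not a basis
    c1 = Fraction(target[0] * b2[1] - b2[0] * target[1], det)
    c2 = Fraction(b1[0] * target[1] - target[0] * b1[1], det)
    return (c1, c2)


b1, b2 = (1, 1), (1, -1)
target = (5, 1)

coeffs = coordinates_in_basis(target, b1, b2)
print(coeffs == (3, 2))                                # True: target = 3*b1 + 2*b2
print(linear_combination(coeffs, [b1, b2]) == target)  # True
print(coordinates_in_basis(target, (1, 2), (2, 4)))    # None: (2, 4) = 2*(1, 2)
```

The last call shows what goes wrong without linear independence: the two vectors span only a line, so they cannot serve as a basis of the plane.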

Understanding the span and basis of a vector space is crucial for analyzing its structure and determining how it can be represented and manipulated. These concepts provide a fundamental framework for working with linear combinations and spanning sets, which are essential building blocks of vector spaces.

“The span of a set of vectors is the set of all possible linear combinations of those vectors.”

Mastering the concepts of linear combinations, spanning sets, span, and basis is vital for a deep comprehension of vector spaces and their applications in various fields, including computer graphics, quantum mechanics, and beyond.

Vector Spaces and Linear Transformations

Exploring the intricate relationship between vector spaces and linear transformations is a crucial aspect of understanding linear algebra. These two fundamental concepts are deeply intertwined, with vector spaces providing the foundation upon which linear transformations operate. By delving into this symbiotic relationship, we can unlock a deeper appreciation for the power and versatility of linear algebra in various real-world applications.

A linear transformation is a function T that maps vectors in one vector space to vectors in another (or the same) vector space while preserving vector addition and scalar multiplication: T(u + v) = T(u) + T(v) and T(cv) = cT(v) for all vectors u and v and every scalar c. As a consequence, every linear transformation sends the zero vector to the zero vector.
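The two preservation conditions can be tested numerically on sample inputs. In the sketch below, `T` is an example of a linear map and `shift` is a translation that fails the test; both functions and the helper `looks_linear` are hypothetical names introduced for illustration, and the check is a spot check rather than a proof.

```python
def T(v):
    """An example linear map on the plane: T(x, y) = (x + y, 2x)."""
    return (v[0] + v[1], 2 * v[0])


def shift(v):
    """Translation by (1, 0); not linear because it moves the zero vector."""
    return (v[0] + 1, v[1])


def looks_linear(f, samples, scalars):
    """Spot-check f(u + v) = f(u) + f(v) and f(a*u) = a*f(u) on the samples."""
    for u in samples:
        fu = f(u)
        for v in samples:
            fv = f(v)
            if f((u[0] + v[0], u[1] + v[1])) != (fu[0] + fv[0], fu[1] + fv[1]):
                return False
        for a in scalars:
            if f((a * u[0], a * u[1])) != (a * fu[0], a * fu[1]):
                return False
    return True


samples = [(1, 0), (0, 1), (2, -3)]
scalars = [0, 1, -2]

print(looks_linear(T, samples, scalars))      # True
print(looks_linear(shift, samples, scalars))  # False
```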

The study of vector spaces and linear transformations is particularly crucial in fields such as computer graphics, quantum mechanics, and data analysis, where the ability to manipulate and transform vector spaces is central to generating realistic images, modeling complex physical phenomena, and extracting meaningful insights from large datasets.

| Characteristic | Description |

|---|---|

| Linearity | Linear transformations preserve the linear structure of vector spaces, ensuring that the transformed vectors maintain the properties of vector addition and scalar multiplication. |

| Representation | Linear transformations between finite-dimensional vector spaces can be represented using matrices once bases are chosen, which provides a convenient and powerful way to compute with them. |

| Subspaces | Linear transformations map subspaces of the original vector space to subspaces of the transformed vector space, allowing for the analysis of the structure and properties of the transformed space. |

By understanding the interplay between vector spaces and linear transformations, students and researchers can unlock new avenues for exploration and problem-solving in the vast and ever-evolving field of linear algebra.

“The study of vector spaces and linear transformations is not only a powerful tool in mathematics, but also an essential foundation for many practical applications in science and engineering.”

Vector Spaces in Real-World Applications

Vector spaces, a fundamental concept in linear algebra, find numerous real-world applications that extend beyond the realm of pure mathematics. Two prime examples of such practical implementations are in the fields of computer graphics and quantum mechanics.

Computer Graphics

In the world of computer graphics, vector spaces play a crucial role in the representation and manipulation of digital images, 3D models, and animations. The properties of vector spaces, such as linearity and transformations, enable the efficient storage, processing, and rendering of complex visual data. By encoding geometric shapes, colors, and textures as vectors, computer graphics algorithms can perform operations like scaling, rotation, and projection with ease, allowing for the creation of stunning visual effects and realistic simulations.
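As a sketch of how such operations look in code, the example below applies a quarter-turn rotation and a uniform scaling to the corner points of a triangle. The names `apply_matrix`, `ROTATE_90`, and `SCALE_2X` are illustrative assumptions, and real graphics pipelines typically use larger matrices and floating-point values.

```python
def apply_matrix(m, p):
    """Multiply a 2x2 matrix (row-major nested tuples) by a 2D point."""
    return (
        m[0][0] * p[0] + m[0][1] * p[1],
        m[1][0] * p[0] + m[1][1] * p[1],
    )


ROTATE_90 = ((0, -1), (1, 0))  # counterclockwise quarter turn
SCALE_2X = ((2, 0), (0, 2))    # doubles every coordinate

triangle = [(0, 0), (1, 0), (0, 1)]

rotated = [apply_matrix(ROTATE_90, p) for p in triangle]
scaled = [apply_matrix(SCALE_2X, p) for p in triangle]

print(rotated)  # [(0, 0), (0, 1), (-1, 0)]
print(scaled)   # [(0, 0), (2, 0), (0, 2)]
```

Because both operations are linear, transforming the corner points is enough: every other point of the triangle is a combination of the corners and is carried along consistently.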

Quantum Mechanics

In the realm of quantum mechanics, vector spaces serve as the mathematical foundation for the description of quantum systems. Quantum states are represented as vectors in a complex vector space equipped with an inner product, known as a Hilbert space. Superposition corresponds directly to forming linear combinations of state vectors, while entanglement arises when the state spaces of several systems are combined through the tensor product. These features underpin the counterintuitive phenomena observed in quantum mechanics, which have revolutionized our understanding of the fundamental nature of the universe.
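A superposition is simply a linear combination of state vectors, rescaled to unit length. The sketch below builds an equal superposition of two basis states of a two-level system using complex amplitudes; the names `ket0`, `ket1`, `superpose`, and `normalize` are illustrative assumptions, and reading squared magnitudes as measurement probabilities follows the standard Born rule.

```python
import math


def norm_squared(state):
    """Sum of squared magnitudes of the complex amplitudes."""
    return sum(abs(a) ** 2 for a in state)


def normalize(state):
    """Rescale a nonzero state vector to unit length."""
    n = math.sqrt(norm_squared(state))
    return tuple(a / n for a in state)


def superpose(alpha, psi, beta, phi):
    """Form the linear combination alpha*psi + beta*phi."""
    return tuple(alpha * x + beta * y for x, y in zip(psi, phi))


ket0 = (1 + 0j, 0j)  # first basis state
ket1 = (0j, 1 + 0j)  # second basis state

plus = normalize(superpose(1, ket0, 1, ket1))
probabilities = [abs(a) ** 2 for a in plus]

print(probabilities)  # both entries are approximately 0.5
```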

These real-world applications of vector spaces demonstrate the profound impact that this branch of linear algebra has on fields as diverse as computer graphics and quantum mechanics. The versatility and power of vector spaces continue to shape our technological advancements and our scientific understanding of the world around us.

| Application | Relevance of Vector Spaces | Key Concepts |

|---|---|---|

| Computer Graphics | Efficient representation and manipulation of visual data | Linearity; transformations such as scaling, rotation, and projection |

| Quantum Mechanics | Mathematical foundation for describing quantum systems | Hilbert space, superposition, entanglement |


Vector Spaces and Matrices

In the realm of linear algebra, vector spaces and matrices are intricately connected, each playing a vital role in understanding and manipulating complex mathematical structures. Mastering the relationship between these two foundational concepts is essential for practitioners working in diverse fields, from computer graphics to quantum mechanics.

Matrices are rectangular arrays of numbers or other scalars. Once bases are chosen, a matrix represents a linear transformation between finite-dimensional vector spaces: multiplying the matrix by the coordinate column of a vector gives the coordinates of the transformed vector. This makes it possible to manipulate and analyze data in a concise and efficient manner, and composing two transformations corresponds to multiplying their matrices.
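The sketch below illustrates this viewpoint with two small matrices: applying one transformation after the other gives the same result as applying the single matrix obtained by multiplying them. The helper names `mat_vec` and `mat_mul` and the sample matrices are assumptions made for illustration.

```python
def mat_vec(m, v):
    """Multiply a matrix (tuple of rows) by a vector."""
    return tuple(sum(row[j] * v[j] for j in range(len(v))) for row in m)


def mat_mul(a, b):
    """Multiply two matrices given as tuples of rows."""
    cols = list(zip(*b))  # columns of b
    return tuple(
        tuple(sum(x * y for x, y in zip(row, col)) for col in cols)
        for row in a
    )


A = ((1, 2), (0, 1))   # a horizontal shear
B = ((0, -1), (1, 0))  # a counterclockwise quarter turn
v = (3, 4)

step_by_step = mat_vec(A, mat_vec(B, v))  # apply B first, then A
combined = mat_vec(mat_mul(A, B), v)      # apply the product matrix once

print(step_by_step)  # (2, 3)
print(combined)      # (2, 3)
```

The order matters: the product `mat_mul(A, B)` corresponds to applying `B` first and `A` second.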

Furthermore, the properties of vector spaces are closely linked to the properties of matrices. The rank of a matrix is the dimension of the span of its columns, a square matrix has a nonzero determinant exactly when its columns form a basis, and eigenvectors are the nonzero vectors that a transformation merely scales, with the eigenvalue as the scale factor. Understanding this interplay lets practitioners use whichever viewpoint, vector spaces or matrices, makes a given problem easier.
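As one concrete link between the two viewpoints, the rank of a matrix can be computed by Gaussian elimination and read as the dimension of the space spanned by its columns. The sketch below is a minimal implementation with exact fractions; the function name `rank` and the sample matrices are illustrative assumptions.

```python
from fractions import Fraction


def rank(matrix):
    """Count the pivots found by Gaussian elimination with exact arithmetic."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    n_rows = len(rows)
    n_cols = len(rows[0]) if rows else 0
    r = 0  # number of pivots found so far
    for c in range(n_cols):
        # find a row at or below position r with a nonzero entry in column c
        pivot = next((i for i in range(r, n_rows) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, n_rows):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
        if r == n_rows:
            break
    return r


full = [[1, 2], [3, 4]]                  # columns form a basis of the plane
deficient = [[1, 2], [2, 4]]             # second row is twice the first
wide = [[1, 0, 2], [0, 1, 3], [1, 1, 5]]  # third row is the sum of the first two

print(rank(full))       # 2
print(rank(deficient))  # 1
print(rank(wide))       # 2
```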

FAQ

What is a vector space?

A vector space is a mathematical structure that consists of a set of elements called vectors, and two operations: vector addition and scalar multiplication. Vector spaces have a rich set of properties and axioms that define their structure and behavior.

Why are vector spaces important in linear algebra?

Vector spaces are fundamental to the study of linear algebra, as they provide a framework for understanding and working with linear transformations, matrices, and a variety of other linear algebra concepts. They form the backbone of many algorithms and applications in fields such as computer science, physics, and engineering.

What is the axiomatic approach to defining vector spaces?

The axiomatic approach to defining vector spaces involves specifying a set of axioms, or basic rules, that must be satisfied for a set of elements to be considered a vector space. These axioms cover the properties of vector addition and scalar multiplication, ensuring a consistent and well-defined mathematical structure.

What are the main operations in vector spaces?

The two main operations in vector spaces are vector addition and scalar multiplication. Vector addition allows for the combination of two vectors, while scalar multiplication involves multiplying a vector by a scalar (a single number).

What are subspaces, and how are they defined?

Subspaces are vector spaces that are contained within a larger vector space. A subspace is a subset of the original vector space that satisfies the same vector space axioms, and it inherits the structure and properties of the larger vector space.

What are linear combinations and spanning sets in vector spaces?

A linear combination is a sum of scalar multiples of vectors, and linear combinations play a crucial role in understanding the structure of vector spaces. A spanning set is a collection of vectors such that every vector in the vector space can be expressed as a linear combination of the set’s elements.

How are vector spaces related to linear transformations?

Vector spaces and linear transformations are closely linked in linear algebra. Linear transformations are functions that preserve the vector space structure, mapping vectors in one vector space to vectors in another vector space (or the same vector space) in a way that respects the operations of vector addition and scalar multiplication.

What are some real-world applications of vector spaces?

Vector spaces have numerous applications in various fields, including computer graphics (where they are used to represent and transform geometric objects), quantum mechanics (where they model the state of quantum systems), and many other areas of science and engineering that rely on linear algebra.

How are vector spaces related to matrices?

Vector spaces and matrices are intimately connected in linear algebra. Matrices can be used to represent linear transformations between vector spaces, and the properties of vector spaces can be studied through the lens of matrix operations and representations.